Introducing "Chain-of-Draft" (CoD)

Zoom's research team recently released an insightful paper proposing a way to cut costs when using "Chain-of-Thought" (CoT) prompting with large language models (LLMs). The technique, which they call "Chain-of-Draft" (CoD), has the model write a minimal draft of each reasoning step instead of a full explanation. This keeps responses short while retaining most of CoT's accuracy.

Understanding Chain-of-Draft vs. Chain-of-Thought

To better grasp the efficiency of CoD, let's examine a simple arithmetic problem:

Problem: Jason had 20 lollipops. He gave Denny some lollipops. Now Jason has 12. How many did he give?

- Chain-of-Thought (traditional, verbose approach):
  - Detailed, step-by-step reasoning that spells out each logical step in full sentences, with explanations along the way.
- Chain-of-Draft (new, concise approach):
  - Streamlined drafts that record only the essential steps (for this problem, essentially "20 - x = 12; x = 20 - 12 = 8"), at the cost of only a small loss in accuracy.
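To make the contrast concrete, the sketch below holds two illustrative responses to the lollipop problem, one CoT-style and one CoD-style. The CoD draft follows the example above; the CoT text is our own paraphrase, not an actual model output. The `####` answer separator and the `extract_answer` and `word_count` helpers are assumptions for illustration. Word counts are only a rough stand-in for tokens, since real token counts depend on the model's tokenizer.

```python
# Illustrative responses to the lollipop problem (hypothetical examples,
# not actual model outputs).
COT_RESPONSE = (
    "Jason started with 20 lollipops. After giving some to Denny, "
    "he has 12 left. The number he gave away is the difference between "
    "what he started with and what remains: 20 - 12 = 8. "
    "Therefore, Jason gave Denny 8 lollipops. #### 8"
)
COD_RESPONSE = "20 - x = 12; x = 20 - 12 = 8. #### 8"


def extract_answer(response: str) -> str:
    """Return the text after the last '####' separator (assumed answer format)."""
    return response.rsplit("####", 1)[-1].strip()


def word_count(response: str) -> int:
    """Rough length proxy: whitespace-separated words, not model tokens."""
    return len(response.split())


for name, response in [("CoT", COT_RESPONSE), ("CoD", COD_RESPONSE)]:
    print(f"{name}: answer={extract_answer(response)}, words={word_count(response)}")
```

Both responses reach the same answer. The draft simply drops the narration, which is where the token savings come from.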

Efficiency and Accuracy Gains

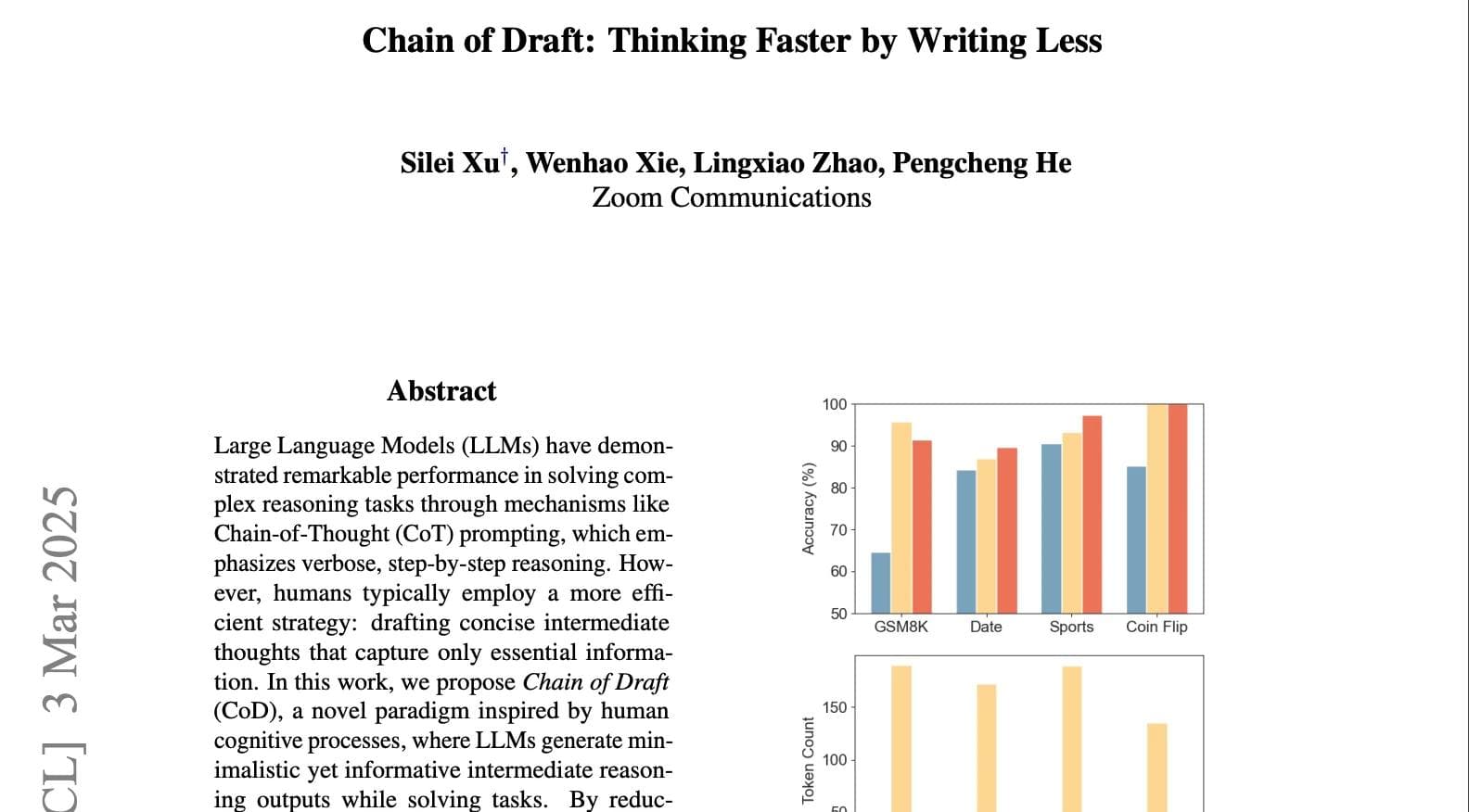

The Chain-of-Draft method reduces the number of tokens generated per response by approximately 80%. Despite this much shorter output, CoD maintains an accuracy of about 91% on the paper's arithmetic benchmark (GSM8K), close behind the roughly 95% achieved with traditional CoT prompting.
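One way to read the token and accuracy figures together is to ask how many generated tokens each approach spends per correct answer. The sketch below plugs in the article's rounded figures (about 80% fewer tokens, 91% vs. 95% accuracy). The 200-token CoT baseline and the `tokens_per_correct` helper are hypothetical, and the resulting ratio does not depend on the baseline.

```python
def tokens_per_correct(avg_tokens: float, accuracy: float) -> float:
    """Average generated tokens spent per correctly answered question."""
    return avg_tokens / accuracy


COT_TOKENS = 200.0                     # hypothetical average CoT response length
COD_TOKENS = COT_TOKENS * (1 - 0.80)   # ~80% fewer tokens, per the article
COT_ACCURACY, COD_ACCURACY = 0.95, 0.91

cot_cost = tokens_per_correct(COT_TOKENS, COT_ACCURACY)
cod_cost = tokens_per_correct(COD_TOKENS, COD_ACCURACY)
print(f"CoT: {cot_cost:.1f} tokens per correct answer")
print(f"CoD: {cod_cost:.1f} tokens per correct answer")
print(f"CoD / CoT ratio: {cod_cost / cot_cost:.2f}")
```

Even after accounting for its lower accuracy, CoD spends roughly a fifth of the tokens per correct answer under these assumptions. What it gives up is the four-point accuracy gap, or about 40 more wrong answers per 1,000 questions.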

Practical Implications

For everyday individual use, the token savings from CoD may seem minor. At enterprise scale, however, where millions of prompts may be processed each month, this token efficiency translates into substantial cost savings.
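A back-of-the-envelope estimate shows how the savings scale with volume. Every input below is a hypothetical placeholder: request volume, average CoT output length, and price per million output tokens. Only the ~80% reduction comes from the figures cited earlier. Substitute your own provider's pricing and measured token counts.

```python
def monthly_output_cost(requests: int, avg_output_tokens: float,
                        usd_per_million_tokens: float) -> float:
    """Cost of generated (output) tokens for one month, in USD."""
    return requests * avg_output_tokens * usd_per_million_tokens / 1_000_000


# Hypothetical workload and pricing; replace with your own numbers.
REQUESTS_PER_MONTH = 5_000_000
COT_OUTPUT_TOKENS = 200          # assumed average CoT response length
PRICE_PER_MILLION = 10.00        # USD per 1M output tokens (assumed)
TOKEN_REDUCTION = 0.80           # approximate CoD reduction cited in the article

cot = monthly_output_cost(REQUESTS_PER_MONTH, COT_OUTPUT_TOKENS, PRICE_PER_MILLION)
cod = monthly_output_cost(REQUESTS_PER_MONTH,
                          COT_OUTPUT_TOKENS * (1 - TOKEN_REDUCTION),
                          PRICE_PER_MILLION)
print(f"CoT: ${cot:,.2f}/month  CoD: ${cod:,.2f}/month  saved: ${cot - cod:,.2f}")
```

The sketch counts output tokens only. If your CoD prompt includes few-shot examples, the extra input tokens offset part of the saving.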

Limitations to Consider

The Chain-of-Draft approach does have limitations:

- If prompts lack clear worked examples or context (for instance, in a zero-shot setting), CoD's accuracy drops significantly and its outputs become less useful.

- Smaller language models benefit less from CoD: on small models, the accuracy gap between CoD and CoT is noticeably wider than on larger models.
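Because CoD depends on good examples, a practical prompt pairs the drafting instruction with a few worked drafts. The sketch below assembles a chat-style message list in the common `role`/`content` format. The instruction text paraphrases the paper's CoD prompt. The example problems, the `build_cod_messages` helper, and the message layout are hypothetical choices for illustration, not the paper's exact setup. The code only builds the messages and does not call any model API.

```python
from typing import Dict, List, Sequence, Tuple

# Paraphrase of the CoD-style instruction; exact wording is an assumption.
COD_INSTRUCTION = (
    "Think step by step, but only keep a minimum draft for each thinking "
    "step, with 5 words at most. Return the answer at the end of the "
    "response after a separator ####."
)

# Hypothetical few-shot examples: (question, concise draft answer).
FEW_SHOT: Tuple[Tuple[str, str], ...] = (
    ("A shelf holds 3 rows of 8 books. 5 books are removed. "
     "How many books remain?",
     "3 * 8 = 24; 24 - 5 = 19. #### 19"),
    ("Mia has 15 dollars and buys 2 pens at 4 dollars each. "
     "How much money does she have left?",
     "2 * 4 = 8; 15 - 8 = 7. #### 7"),
)


def build_cod_messages(question: str,
                       examples: Sequence[Tuple[str, str]] = FEW_SHOT
                       ) -> List[Dict[str, str]]:
    """Assemble a system instruction, few-shot drafts, and the new question."""
    messages = [{"role": "system", "content": COD_INSTRUCTION}]
    for example_question, draft in examples:
        messages.append({"role": "user", "content": example_question})
        messages.append({"role": "assistant", "content": draft})
    messages.append({"role": "user", "content": question})
    return messages


msgs = build_cod_messages(
    "Jason had 20 lollipops. He gave Denny some lollipops. "
    "Now Jason has 12. How many did he give?"
)
for m in msgs:
    print(f"{m['role']}: {m['content']}")
```

Passing `examples=()` drops the worked drafts and yields the zero-shot setting, which is where CoD's accuracy suffers most.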

Conclusion

Cost-cutting techniques like CoD are valuable, particularly at scale, but it's crucial to weigh them against your specific needs. For narrowly scoped, mission-critical tasks where accuracy is paramount, the traditional Chain-of-Thought method may be the better choice, since its higher accuracy can outweigh the token savings CoD offers.

For those interested, the full research paper is "Chain of Draft: Thinking Faster by Writing Less."